I've slowly been learning bits of bash and various related Unix utilities that are useful for processing text files - like the copious log files FreeRADIUS spits out with all sorts of useful information. I like "just in time" learning - it's often the only learning I have time for...

|

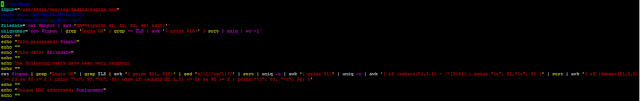

| A screenshot of a bash shell script. |

Looking through the list of currently connected clients for users with more than n devices connected at once is a fool's errand, even if your wireless system has such a view (UniFi has one). It won't catch people who break the rules over the course of a day, or while you're not actively looking, or across Access Points from two different manufacturers. A 24-hour RADIUS logfile, on the other hand... well, that has a lot of potential.

Of course, processing of this kind is quite easily accomplished in a simple shell script...

Have a look at a logfile you're interested in doing something with. In my scenario, it was radius.log.

If I issue cat radius.log in the correct directory (/usr/local/var/log/radius/), I'll see the contents of the file (rapidly) scroll past.

(Hint: if you actually want to read the whole file, particularly a big file longer than your scroll buffer, try less <filename>, or open it in a text editor like vi, vim, nano or emacs.) less is better than more: more shows the contents a screen at a time, but you can't scroll back as easily!

Mine has thousands of lines that look like:

Tue Feb 6 10:40:47 2018 : Auth: (11689929) Login OK: [USER] (from client kcwifi port 0 cli [MAC] via TLS tunnel)

Tue Feb 6 10:40:47 2018 : Auth: (11689929) Login OK: [USER] (from client kcwifi port 0 cli [MAC])

(I've removed real data from the above example: [USER] stands in for a real username, which the log shows inside the square brackets, and [MAC] represents a MAC address.)

Obviously, one is the tunneled RADIUS event, and the other is the untunneled one. Each line has all the info we want to answer our questions: a username and a MAC address. But which should we use? In the TLS tunnel line, the MAC address doesn't have a trailing ")" stuck to it, which is useful. You could strip it off with something like awk or sed, but why not just pick the "cleanest" line that has all the info you need? I actually did the stripping at first, before noticing that selecting the other line gave cleaner output.

There will also be other messages occasionally, which you'll want to filter out. The easiest way to get what you want is to filter out everything EXCEPT what you want - by only selecting what you DO want.

One of the joys of bash scripting is that your CLI is also usually bash, so you can experiment from the command line until you're happy, and then wrap it up nicely in a shell script.

You're going to make a lot of use of pipes - | - to hand off processed text to the next step.

Start out with

cat radius.log | grep 'Login OK'

If you've not used them before: cat prints out the text of a file to the screen (stdout), and grep searches for lines matching a pattern. grep is super-useful. A very handy command I use quite often is ps aux | grep <program name> when I'm trying to find out whether a process is running or not (and, if I want to kill it, what its PID is).

This will only output lines with a successful login. (Obviously, you can pick something else if you're interested in errors! And grep -v does the inverse, i.e. it shows the lines that do "not" match <searchterm>.)

That's still twice as many records as we need, so we'll pick out the lines that say TLS in them:

cat radius.log | grep 'Login OK' | grep TLS

Now we have one event for each successful login.

There's still way more info than I want - I just want a clean username and a MAC address.

awk is a tremendously useful program for processing text. It was written at Bell Labs in the late 1970s for exactly this kind of quick pattern-matching and text-processing job, which suits Unix, where pretty much everything is a text file by design. One of its most useful features is splitting each line into whitespace-delimited fields ("columns"). It turns out that in my output, the 11th and 18th fields hold the username and MAC I'm interested in:

cat radius.log | grep 'Login OK' | grep TLS | awk '{print $11, $18}'

Now I have a list of every single username and MAC; unfortunately, the username has square brackets around it.
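If you're not sure which field numbers to use for your own log format, awk can number the fields for you. Here's a small sketch run against the anonymised sample line from above (the function name number_fields is just for illustration). By default awk treats any run of spaces or tabs as a single separator.

```shell
#!/bin/bash
# Print every whitespace-separated field of each input line, prefixed with
# its awk field number, so you can see which $N to pick.
number_fields() {
    awk '{ for (i = 1; i <= NF; i++) print i, $i }'
}

# The anonymised sample line from the post; [USER] and [MAC] are placeholders.
sample='Tue Feb 6 10:40:47 2018 : Auth: (11689929) Login OK: [USER] (from client kcwifi port 0 cli [MAC] via TLS tunnel)'
echo "$sample" | number_fields    # includes "11 [USER]" and "18 [MAC]"
```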

Another "old" Unix utility is pretty good at changing things on the fly: sed.

cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//'

This strips out the square brackets (the sed expression deletes the first [ and the first ] on each line), leaving two columns: a clean username, and a hyphen-separated, ALL UPPERCASE MAC address, which is fine for my use.

You could change the format here if you wanted to (to the Windows-style all lowercase with no delimiters, or to the more familiar colon-separated version). Learning to do this yourself by hacking at the output will start you on your way to learning these tools, and is exactly how I built up this little "utility".
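A minimal sketch of those two conversions, assuming the MACs arrive in the ALL UPPERCASE, hyphen-separated form shown above. The function names are hypothetical. tr lowercases the hex letters (digits pass through unchanged) and sed swaps or deletes the hyphens.

```shell
#!/bin/bash
# Colon-separated lowercase, e.g. 00-1A-2B-3C-4D-5E -> 00:1a:2b:3c:4d:5e
to_colon_mac() {
    tr '[:upper:]' '[:lower:]' | sed 's/-/:/g'
}

# Lowercase with no delimiters, e.g. 00-1A-2B-3C-4D-5E -> 001a2b3c4d5e
to_bare_mac() {
    tr '[:upper:]' '[:lower:]' | sed 's/-//g'
}

echo "00-1A-2B-3C-4D-5E" | to_colon_mac   # 00:1a:2b:3c:4d:5e
echo "00-1A-2B-3C-4D-5E" | to_bare_mac    # 001a2b3c4d5e
```

Either function can be tacked onto the end of a pipeline, or applied to just the MAC column with awk if you want to keep the usernames untouched.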

Now we want to get down to unique instances of each pair.

To start with, let's sort them. I originally thought this step wasn't strictly necessary, but further reading shows it is: uniq only removes duplicate lines that sit next to each other, so its input needs to be sorted first (sort -u does both jobs in one go). Sorting also makes it easier to see what's going on and whether it "looks right", and, surprisingly, it greatly decreases execution time later.

cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort

Hey presto: a sorted list with lots of duplicates. Guess what? There's a utility specifically for finding unique lines, called uniq. Here, you need to handle users not respecting your case expectations: RADIUS and Windows auth don't care about case, but uniq does. 27bobb is also 27BobB: the same user, but not the same to uniq unless you tell it to ignore case (with -i).
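Two behaviours are worth seeing for yourself, sketched here on made-up data. First, uniq only collapses duplicates on neighbouring lines. Second, uniq -i only helps if the case variants also end up next to each other. LC_ALL=C pins down the sort order for the demonstration. In other locales a plain sort may or may not keep the variants together, which is exactly why relying on it is fragile.

```shell
#!/bin/bash
# 1) uniq only collapses *adjacent* duplicates, so unsorted input slips through.
printf '%s\n' alice bob alice | uniq          # 3 lines: alice, bob, alice
printf '%s\n' alice bob alice | sort | uniq   # 2 lines: alice, bob
printf '%s\n' alice bob alice | sort -u       # same result in one step

# 2) uniq -i ignores case, but the case variants must still be adjacent.
#    In the C locale a plain sort puts uppercase before lowercase and can
#    split them up; sort -f folds case while sorting and keeps them together.
pairs='27bobb AA-BB-CC-DD-EE-01
27alice AA-BB-CC-DD-EE-02
27BobB AA-BB-CC-DD-EE-01'
echo "$pairs" | LC_ALL=C sort | uniq -i      # 3 lines: 27BobB and 27bobb aren't neighbours
echo "$pairs" | LC_ALL=C sort -f | uniq -i   # 2 lines: the two bobb variants collapse
```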

cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort -f | uniq -i

(sort -f folds case while sorting, so 27bobb and 27BobB end up next to each other. uniq -i needs this because it only compares neighbouring lines.)

Now we have a list of unique MACs by username. Great. But I don't want to count them by hand; I just want to know whether or not a user has broken the rules. Firstly, we don't actually need the MAC addresses any more. Dump them with awk by only selecting the first (username) space-delimited column:

cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort -f | uniq -i | awk '{ print $1}'

And now, how many per user? Turns out uniq has another trick: counting things (-c).

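cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort -f | uniq -i | awk '{ print $1}' | uniq -ci

Now we have a list with a count of unique MAC addresses per username. The -i is needed again here: if 27bobb and 27BobB logged in from different MACs, both lines survive the first uniq, and they should still be counted as one user.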
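The counting half of the pipeline can be wrapped in a function and tried on a few hand-made "username MAC" pairs before pointing it at a real log. This is a sketch. The function name count_devices is hypothetical, and LC_ALL=C is only there to make the sort order predictable.

```shell
#!/bin/bash
# Count unique MACs per username from "username MAC" lines on stdin.
# sort -f / uniq -i treat 27bobb and 27BobB as the same user; the final
# uniq -ci prefixes each (case-folded) username with its device count.
count_devices() {
    LC_ALL=C sort -f | uniq -i | awk '{ print $1 }' | uniq -ci
}

printf '%s\n' \
    '27bobb AA-BB-CC-DD-EE-01' \
    '27BobB AA-BB-CC-DD-EE-02' \
    '27bobb AA-BB-CC-DD-EE-01' \
    '19carol AA-BB-CC-DD-EE-03' | count_devices
# prints a count of 1 for 19carol and 2 for 27bobb
```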

Now I only care about students, and they have a useful property compared with all other logins - they start with two numbers (all other users start with letters). This means I can get awk to only print out entries that match that pattern, along with the unique MAC count.

cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort -f | uniq -i | awk '{ print $1}' | uniq -ci | awk '{ if (substr($2,1,1) ~ /^[0-9]/ ) print "\t", $2,"\t", $1 }'

You'll notice there's a regular expression in there that matches any numeral. The awk basically says "look at the first character of the username; if it's a digit, print out the line in this order, else discard it".
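The awk above only checks the first character (substr($2,1,1)), so a username like 9lives would slip through. If you want to insist on two leading digits, as the naming convention suggests, a regular expression match on the whole field does it. Here's a sketch, with hypothetical sample lines in uniq -c's "count username" format and the same output layout as above:

```shell
#!/bin/bash
# Keep only "count username" lines whose username starts with two digits,
# printing username then count (tab-separated, as in the post).
students_only() {
    awk '$2 ~ /^[0-9][0-9]/ { print "\t", $2, "\t", $1 }'
}

printf '%s\n' '      2 27bobb' '      4 jsmith' '      1 9lives' | students_only
# prints only the 27bobb line: jsmith starts with a letter, 9lives has one digit
```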

I then have another (unnecessary) sort, mainly so I can check my work (looking for users that haven't collapsed down to a single instance, for example).

cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort -f | uniq -i | awk '{ print $1}' | uniq -ci | awk '{ if (substr($2,1,1) ~ /^[0-9]/ ) print "\t", $2,"\t", $1 }' | sort

I also prefer to see username before count, so I've swapped them around in the output. Notice the $<numeral> syntax for dealing with space separated "columns".

Now things get complex, as I have a number of conditions. The numbers actually tell me what grade you're in: they are the last two digits of the year you finish high school. There are different rules depending on your grade. This used to be more complex, as there were older pupils who were only allowed one device, but we now allow all high school pupils up to two devices (unique MACs), and all junior pupils up to one (unique MAC).

So we need some conditional awk. It's not pretty, but it works.

If you know that everyone in junior school has the number 23 or above in their username, and everyone in high school has 22 or less, it's pretty easy. (You could do some basic math to work this out based on current year and have a variable that changes and is inserted into the right spots, so it's always accurate, but I just edit the two numbers each year - this is particularly useful if you get to the point of creating a little shell script file you can run whenever).

Basically it says:

"If the two-digit year at the start of the username (column 1, after the earlier swap) is 23 or above and the count (column 2) is 2 or more, print it out as <username> <count>; else, if the year is 22 or less and the count is 3 or more, print it out. Anything that doesn't match either of these conditions hasn't broken the rules and is tossed out."

cat radius.log | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort -f | uniq -i | awk '{ print $1}' | uniq -ci | awk '{ if (substr($2,1,1) ~ /^[0-9]/ ) print "\t", $2,"\t", $1 }' | sort | awk '{ if (substr($1,1,2) >= 23 && $2 >= 2 ) print "\t", $1,"\t", $2; else if (substr($1,1,2) <= 22 && $2 >= 3 ) print "\t", $1, "\t", $2; }'

There's a lot of joy in building up a chunk of text like this that does something useful.
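As the parenthetical about basic math suggests, the cutoff could be worked out from the current year instead of being edited by hand. Here's a hedged sketch. It assumes, hypothetically, that high school spans five year groups, so in 2018 the high-school years run up to 18 + 4 = 22; adjust the + 4 for your school. It also adds + 0 so awk compares the year as a number. In POSIX awk, substr() returns a string, so the original comparison is done as text, which happens to give the right answer for two-digit years. The function name flag_users is illustrative.

```shell
#!/bin/bash
# Apply the device limits to "username count" lines, with the last
# high-school year passed in rather than hard-coded as 22/23.
flag_users() {
    local hs_max=$1
    awk -v hs_max="$hs_max" '{
        grade = substr($1, 1, 2) + 0           # "+ 0" forces a numeric comparison
        if (grade > hs_max && $2 >= 2)         # junior: more than one device
            print "\t", $1, "\t", $2
        else if (grade <= hs_max && $2 >= 3)   # high school: more than two devices
            print "\t", $1, "\t", $2
    }'
}

# ASSUMPTION: five high-school year groups, so the cutoff is this year + 4.
yy=$(date +%y)                 # two-digit year, e.g. 18
hs_max=$(( 10#$yy + 4 ))       # 10# stops "08"/"09" being read as invalid octal

# Fixed cutoff for a repeatable demo; in real use: ... | flag_users "$hs_max"
printf '%s\n' '23amy 2' '22ben 2' '22cat 3' | flag_users 22
# prints 23amy (junior with 2) and 22cat (high school with 3), not 22ben
```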

Getting a count of unique MAC addresses that have successfully authenticated is much simpler:

cat radius.log | grep 'Login OK' | grep -v TLS | awk '{ print $18}' | sort | uniq | wc -l

Of course, what's really nice is a basic report that does a bunch of processing and outputs a neat summary of the trouble-makers. Put the commands in a file with a .sh extension that starts with a shebang line (#!/bin/bash), make it executable with chmod +x, and you can run it at any time with ./<scriptname>.sh

Here's mine:

#!/bin/bash

input="/usr/local/var/log/radius/radius.log"

filedate=$(cat "$input" | awk 'NR==1{print $1, $2, $3, $5; exit}')

uniquemac=$(cat "$input" | grep 'Login OK' | grep -v TLS | awk '{ print $18}' | sort | uniq | wc -l)

echo ""

echo "File processed: $input"

echo ""

echo "File date: $filedate"

echo ""

echo "The following users have been very naughty:"

echo ""

cat "$input" | grep 'Login OK' | grep TLS | awk '{ print $11, $18}' | sed 's/\[//;s/\]//' | sort -f | uniq -i | awk '{ print $1}' | uniq -ci | awk '{ if (substr($2,1,1) ~ /^[0-9]/ ) print "\t", $2,"\t", $1 }' | sort | awk '{ if (substr($1,1,2) >= 23 && $2 >= 2 ) print "\t", $1,"\t", $2; else if (substr($1,1,2) <= 22 && $2 >= 3 ) print "\t", $1, "\t", $2; }'

echo ""

echo "Unique MAC addresses: $uniquemac"

echo ""

This outputs information like the file date, a formatted list of naughty users and the unique MAC address count. It should be very easy for you to modify for your environment and needs.
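The uniquemac count takes field 18 from the untunneled lines, trailing ")" and all. Every one of those MACs carries the same ")", so the count is still right. A one-pass awk alternative, assuming the same field layout, strips the parenthesis so the MACs are usable as well as countable. The function name and sample lines are made up for illustration.

```shell
#!/bin/bash
# Count distinct MACs from successful, untunneled logins read on stdin,
# e.g. count_unique_macs < radius.log
count_unique_macs() {
    awk '/Login OK/ && !/TLS/ { mac = $18; sub(/[)]$/, "", mac); print mac }' \
        | sort -u | wc -l | tr -d ' '    # tr drops the padding some wc versions add
}

sample_log='Tue Feb 6 10:40:47 2018 : Auth: (1) Login OK: [27bobb] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-01 via TLS tunnel)
Tue Feb 6 10:40:47 2018 : Auth: (1) Login OK: [27bobb] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-01)
Tue Feb 6 10:41:02 2018 : Auth: (2) Login OK: [27bobb] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-02)
Tue Feb 6 10:42:13 2018 : Auth: (3) Login OK: [19carol] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-01)'

printf '%s\n' "$sample_log" | count_unique_macs    # 2
```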

Another thing that is useful to learn is how to pass an argument from the command line to a script. For example, say a pupil would like to know which of their MAC addresses are being counted. It would be good to be able to pass a username to a script and have it spit out a list of unique MACs associated with that username. Of course, with more work, you could probably modify the script above to include a report of the infringing MAC addresses associated with each current naughty kid, but that's more complicated than I needed, so I've not done it.

Here's a script that takes an argument of username from the commandline and spits out associated unique MAC addresses. You'll see the username=$1 line, which is bash for "take the first argument passed in the command line and set this variable to it"; that variable is later called in the line that processes the RADIUS logfile:

#!/bin/bash

filename="/usr/local/var/log/radius/radius.log"

username=$1

echo "$username has used the following unique MAC addresses in $filename:"

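# -i: ignore case; -w: whole word, so 27bob doesn't match 27bobby; -F: treat the username as plain text

cat "$filename" | grep 'Login OK' | grep TLS | grep -iwF -- "$username" | awk '{print $18}' | sort -u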

This then quickly spits out all the unique MAC addresses associated with that username. You can tart it up with various formatting and spacing options, but it's a quick tool to answer a question that used to be a bit more... painful.

I prefer this to the alternative, which is to call for user input after execution, which you can also do:

#!/bin/bash

filename="/usr/local/var/log/radius/radius.log"

echo "Please type the username you want unique MAC addresses for from $filename..."

read username

echo "$username has used the following unique MAC addresses:"

# case-insensitive, whole-word, literal match on the username

cat "$filename" | grep 'Login OK' | grep TLS | grep -iwF -- "$username" | awk '{print $18}' | sort -u

You may have noticed I've stored the filename in a variable, which means you can easily point the script at other files (say you want to process yesterday's...).
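Taking that one step further, the file can be a second, optional command-line argument, with a usage message if the username is missing. This is a sketch, not the script above. The function name macs_for_user is hypothetical. In a script you would call it as macs_for_user "$@", e.g. ./macs.sh 27bobb /path/to/another/radius.log.

```shell
#!/bin/bash
# Print the unique MACs (from TLS login lines) for one username.
# $1 = username (required), $2 = log file (optional, defaults to the post's path).
macs_for_user() {
    local username=$1
    local filename=${2:-/usr/local/var/log/radius/radius.log}
    if [ -z "$username" ]; then
        echo "usage: macs_for_user <username> [logfile]" >&2
        return 1
    fi
    # -i: ignore case; -w: whole word, so 27bob doesn't match 27bobby;
    # -F: treat the username as plain text rather than a regex
    grep 'Login OK' "$filename" | grep TLS | grep -iwF -- "$username" \
        | awk '{ print $18 }' | sort -u
}
```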

I have no doubt there are (much) more elegant ways of achieving these features (there are, for instance, some arguably unnecessary sorts, and you could probably collapse some of the editing and selection steps with more complex regex) - there are certainly plenty of different utilities you could use within bash to the same end, or you could even write programs in perl or python etc. - and purists would say "you could do your grep selections in awk or sed"... Getting better is always a good aim, but getting somewhere is still useful, even if it's not pretty or elegant.
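For the curious, here is roughly what the purists mean: a one-pass awk sketch of the naughty-user pipeline. It assumes the field layout shown earlier ($11 = [username], $18 = MAC on TLS lines) and the same limits (juniors one device, high school two). hs_max plays the role of the hard-coded 22. It checks for two leading digits rather than one, and the function name naughty_users is hypothetical.

```shell
#!/bin/bash
# One pass over the log on stdin: count distinct MACs per lowercased
# student username from TLS logins, then print anyone over their limit.
naughty_users() {
    awk -v hs_max="${1:-22}" '
        /Login OK/ && /TLS/ {
            user = tolower(substr($11, 2, length($11) - 2))   # drop [ ], fold case
            if (!((user, $18) in seen)) {      # first sighting of this user+MAC
                seen[user, $18] = 1
                count[user]++
            }
        }
        END {
            for (user in count) {
                if (user !~ /^[0-9][0-9]/) continue            # students only
                limit = (substr(user, 1, 2) + 0 > hs_max) ? 1 : 2
                if (count[user] > limit)
                    print "\t" user "\t" count[user]
            }
        }' | sort
}

sample='Tue Feb 6 10:40:47 2018 : Auth: (1) Login OK: [27bobb] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-01 via TLS tunnel)
Tue Feb 6 10:40:47 2018 : Auth: (1) Login OK: [27bobb] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-01)
Tue Feb 6 10:41:02 2018 : Auth: (2) Login OK: [27BobB] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-02 via TLS tunnel)
Tue Feb 6 10:42:13 2018 : Auth: (3) Login OK: [20dave] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-03 via TLS tunnel)
Tue Feb 6 10:43:13 2018 : Auth: (4) Login OK: [20dave] (from client kcwifi port 0 cli AA-BB-CC-DD-EE-04 via TLS tunnel)'

printf '%s\n' "$sample" | naughty_users 22   # only 27bobb (junior, 2 devices)
```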

But this works, and quickly developing a useful tool with things you already know is often better than over-complicating something like parsing a logfile to answer a frequently asked question like "who is breaking the rules today?" Of course, it's also a useful first step towards more advanced file processing and automation: a little work now will save a lot of work over time. And eventually, you'll learn what bash scripting wizards know, and adopt their better practices.

Of course, once you learn a couple of these commands, you'll likely think of other things you could usefully parse for information to questions you commonly ask...

A useful exercise for the reader, for bonus points: work out how to trigger such scripts from cron, and get the cron job to email the results to you! Run it on yesterday's file, rolled over by logrotate.

One should be aware that particularly savvy users of bash will probably frown at "useless use of cat": there are other ways of getting a file into a pipeline that don't invoke cat (which is actually designed to concatenate multiple files, but many have gotten used to it as an easy way to display the contents of a file).

See e.g. https://web.archive.org/web/20160711205930/http://porkmail.org/era/unix/award.html for a discussion.
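The cat-free alternatives look like this. It's a small sketch on a throwaway sample file standing in for radius.log. grep, awk, sed and sort all accept filenames directly, or the shell can redirect the file into the command's stdin.

```shell
#!/bin/bash
# Throwaway sample file (hypothetical contents), removed when the script exits.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
printf '%s\n' 'Login OK: [a]' 'Login incorrect: [b]' 'Login OK: [c]' > "$log"

cat "$log" | grep 'Login OK'           # the "useless use of cat" version
grep 'Login OK' "$log"                 # grep opens the file itself
< "$log" grep 'Login OK'               # the shell feeds the file to grep's stdin
awk '/Login OK/ { print $3 }' "$log"   # awk takes a filename too: [a] and [c]
```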

I've just discovered the zcat command, which can read Gzipped files (which logrotate will often create to save disk space) - this is extremely useful for handling logfiles, and you too should know it exists. :)
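A quick way to try this without touching real logs is to gzip a small sample, as logrotate's compress option would, then read it straight through a pipeline. On most Linux systems zcat file.gz does the same thing as gzip -dc file.gz. The sketch uses the longer form because it behaves the same wherever gzip is installed. The file name radius.log.1 is only an example.

```shell
#!/bin/bash
# Build a throwaway compressed "rotated" log in a temporary directory.
tmpdir=$(mktemp -d)
trap 'rm -rf "$tmpdir"' EXIT
printf '%s\n' 'Login OK: [a]' 'Login incorrect: [b]' 'Login OK: [c]' > "$tmpdir/radius.log.1"
gzip "$tmpdir/radius.log.1"    # replaces it with radius.log.1.gz

# Decompress to stdout and process it without unpacking it on disk.
gzip -dc "$tmpdir/radius.log.1.gz" | grep -c 'Login OK'    # 2
```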

See also https://en.wikipedia.org/wiki/Gzip#zcat

Also, find out about sort -k. It's magical.
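For example, to list the worst offenders first from "username count" lines: -k2,2 limits the sort key to field 2, n compares numerically (so 10 sorts after 9 rather than before it), and r reverses the order. The sample lines are made up.

```shell
#!/bin/bash
# Sort "username count" lines by count, largest first.
printf '%s\n' '27bobb 2' '19carol 10' '22ben 3' | sort -k2,2nr
# 19carol 10
# 22ben 3
# 27bobb 2
```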
